深度强化学习DQN(Deep Q-Learning)

本文最后更新于:August 3, 2021 am

DQN

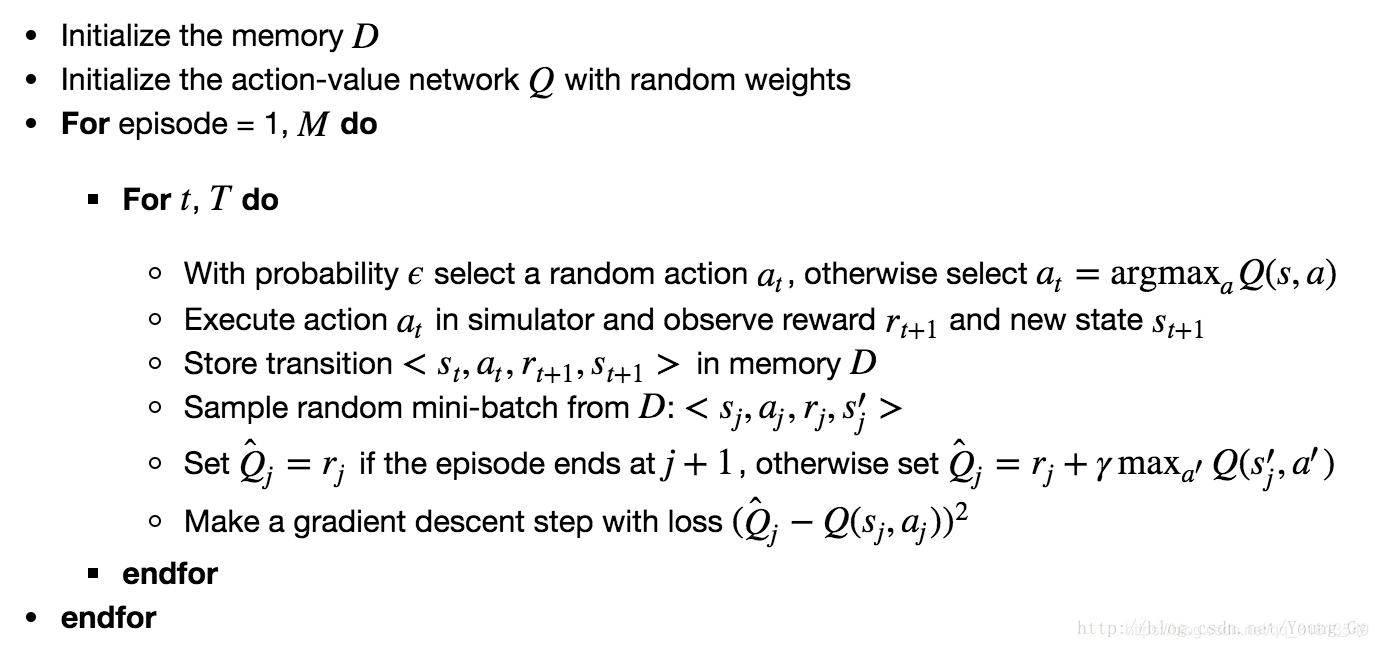

q-learning存在一个问题,真实情况的state可能无穷多,这样q-table就会无限大,解决这个问题的办法是通过神经网络实现q-table。输入state,输出不同action的q-value。

- q-leaning的执行过程:输出state,根据Q-Table输出不同action的q-value,根据探索利用策略,获取当前状态下采取的动作a.

- DQN的执行过程:输出state,根据Network输出不同action的q-value,根据探索利用策略,获取当前状态下采取的动作a.

## DQN的架构

- 通过记忆库,降低观测序列之间的相关性(强化学习由于state之间的相关性存在稳定性的问题,会极大的改变策略进而改变数据分布,解决的办法是在训练的时候存储当前训练的状态到记忆体M,更新参数的时候随机从M中抽样mini-batch进行更新.M 中存储的数据类型为 <s,a,r,s′>,M有最大长度的限制,以保证更新采用的数据都是最近的数据。)

- 通过Q-target的周期性固定,降低Q-target之间的相关性

基本架构:

随机抽取记忆库中的数据进行学习,打乱了经历之间的相关性,使得神经网络更新更有效率,Fixed Q-targets 使得target_net能够延迟更新参数从而也打乱了相关性。

- DQN中有两个神经网络(NN)一个参数相对固定的网络,我们叫做target-net,用来获取Q-目标(Q-target)的数值, 另外一个叫做eval_net用来获取Q-评估(Q-eval)的数值。

- 我们在训练神经网络参数时用到的损失函数(Loss function),实际上就是q_target 减 q_eval的结果 (loss = q_target- q_eval )。

- 反向传播真正训练的网络是只有一个,就是eval_net。target_net 只做正向传播得到q_target (q_target = r +γ*max Q(s,a)). 其中 Q(s,a)是若干个经过target-net正向传播的结果。

- 训练的数据是从记忆库中随机提取的,记忆库记录着每一个状态下的行动,奖励,和下一个状态的结果(s, a, r, s’)。记忆库的大小有限,当记录满了数据之后,下一个数据会覆盖记忆库中的第一个数据,记忆库就是这样覆盖更新的。

- q_target的网络target_net也会定期更新一下参数,由于target_net和eval_net的结构是一样的。更新q_target网络的参数就是直接将q_eval 的参数复制过来就行了。

DQN的算法

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

217

218

219

220

221# import lib

import gym

import tensorflow as tf

import numpy as np

# Create the Cart-Pole game environment

env = gym.make('CartPole-v0')

# Q-network

class QNetwork:

def __init__(self, learning_rate=0.01, state_size=4,

action_size=2, hidden_size=10,

name='QNetwork'):

# state inputs to the Q-network

with tf.variable_scope(name):

self.inputs_ = tf.placeholder(tf.float32, [None, state_size], name='inputs')

# One hot encode the actions to later choose the Q-value for the action

self.actions_ = tf.placeholder(tf.int32, [None], name='actions')

one_hot_actions = tf.one_hot(self.actions_, action_size)

# Target Q values for training

self.targetQs_ = tf.placeholder(tf.float32, [None], name='target')

# ReLU hidden layers

self.fc1 = tf.contrib.layers.fully_connected(self.inputs_, hidden_size)

self.fc2 = tf.contrib.layers.fully_connected(self.fc1, hidden_size)

# Linear output layer

self.output = tf.contrib.layers.fully_connected(self.fc2, action_size,

activation_fn=None)

### Train with loss (targetQ - Q)^2

# output has length 2, for two actions. This next line chooses

# one value from output (per row) according to the one-hot encoded actions.

self.Q = tf.reduce_sum(tf.multiply(self.output, one_hot_actions), axis=1)

self.loss = tf.reduce_mean(tf.square(self.targetQs_ - self.Q))

self.opt = tf.train.AdamOptimizer(learning_rate).minimize(self.loss)

# Experience replay

from collections import deque

class Memory():

def __init__(self, max_size = 1000):

self.buffer = deque(maxlen=max_size)

def add(self, experience):

self.buffer.append(experience)

def sample(self, batch_size):

idx = np.random.choice(np.arange(len(self.buffer)),

size=batch_size,

replace=False)

return [self.buffer[ii] for ii in idx]

# hyperparameters

train_episodes = 1000 # max number of episodes to learn from

max_steps = 200 # max steps in an episode

gamma = 0.99 # future reward discount

# Exploration parameters

explore_start = 1.0 # exploration probability at start

explore_stop = 0.01 # minimum exploration probability

decay_rate = 0.0001 # exponential decay rate for exploration prob

# Network parameters

hidden_size = 64 # number of units in each Q-network hidden layer

learning_rate = 0.0001 # Q-network learning rate

# Memory parameters

memory_size = 10000 # memory capacity

batch_size = 20 # experience mini-batch size

pretrain_length = batch_size # number experiences to pretrain the memory

tf.reset_default_graph()

mainQN = QNetwork(name='main', hidden_size=hidden_size, learning_rate=learning_rate)

# Populate the experience memory

# Initialize the simulation

env.reset()

# Take one random step to get the pole and cart moving

state, reward, done, _ = env.step(env.action_space.sample())

memory = Memory(max_size=memory_size)

# Make a bunch of random actions and store the experiences

for ii in range(pretrain_length):

# Uncomment the line below to watch the simulation

# env.render()

# Make a random action

action = env.action_space.sample()

next_state, reward, done, _ = env.step(action)

if done:

# The simulation fails so no next state

next_state = np.zeros(state.shape)

# Add experience to memory

memory.add((state, action, reward, next_state))

# Start new episode

env.reset()

# Take one random step to get the pole and cart moving

state, reward, done, _ = env.step(env.action_space.sample())

else:

# Add experience to memory

memory.add((state, action, reward, next_state))

state = next_state

# Training

# Now train with experiences

saver = tf.train.Saver()

rewards_list = []

with tf.Session() as sess:

# Initialize variables

sess.run(tf.global_variables_initializer())

step = 0

for ep in range(1, train_episodes):

total_reward = 0

t = 0

while t < max_steps:

step += 1

# Uncomment this next line to watch the training

env.render()

# Explore or Exploit

explore_p = explore_stop + (explore_start - explore_stop)*np.exp(-decay_rate*step)

if explore_p > np.random.rand():

# Make a random action

action = env.action_space.sample()

else:

# Get action from Q-network

feed = {mainQN.inputs_: state.reshape((1, *state.shape))}

Qs = sess.run(mainQN.output, feed_dict=feed)

action = np.argmax(Qs)

# Take action, get new state and reward

next_state, reward, done, _ = env.step(action)

total_reward += reward

if done:

# the episode ends so no next state

next_state = np.zeros(state.shape)

t = max_steps

print('Episode: {}'.format(ep),

'Total reward: {}'.format(total_reward),

'Training loss: {:.4f}'.format(loss),

'Explore P: {:.4f}'.format(explore_p))

rewards_list.append((ep, total_reward))

# Add experience to memory

memory.add((state, action, reward, next_state))

# Start new episode

env.reset()

# Take one random step to get the pole and cart moving

state, reward, done, _ = env.step(env.action_space.sample())

else:

# Add experience to memory

memory.add((state, action, reward, next_state))

state = next_state

t += 1

# Sample mini-batch from memory

batch = memory.sample(batch_size)

states = np.array([each[0] for each in batch])

actions = np.array([each[1] for each in batch])

rewards = np.array([each[2] for each in batch])

next_states = np.array([each[3] for each in batch])

# Train network

target_Qs = sess.run(mainQN.output, feed_dict={mainQN.inputs_: next_states})

# Set target_Qs to 0 for states where episode ends

episode_ends = (next_states == np.zeros(states[0].shape)).all(axis=1)

target_Qs[episode_ends] = (0, 0)

targets = rewards + gamma * np.max(target_Qs, axis=1)

loss, _ = sess.run([mainQN.loss, mainQN.opt],

feed_dict={mainQN.inputs_: states,

mainQN.targetQs_: targets,

mainQN.actions_: actions})

saver.save(sess, "checkpoints/cartpole.ckpt")

# Testing

test_episodes = 10

test_max_steps = 400

env.reset()

with tf.Session() as sess:

saver.restore(sess, tf.train.latest_checkpoint('checkpoints'))

for ep in range(1, test_episodes):

t = 0

while t < test_max_steps:

env.render()

# Get action from Q-network

feed = {mainQN.inputs_: state.reshape((1, *state.shape))}

Qs = sess.run(mainQN.output, feed_dict=feed)

action = np.argmax(Qs)

# Take action, get new state and reward

next_state, reward, done, _ = env.step(action)

if done:

t = test_max_steps

env.reset()

# Take one random step to get the pole and cart moving

state, reward, done, _ = env.step(env.action_space.sample())

else:

state = next_state

t += 1

env.close()

本博客所有文章除特别声明外,均采用 CC BY-SA 4.0 协议 ,转载请注明出处!